I like to consider myself quite prepared and organised, but when I suddenly had interviews that needed transcribing, I realised I didn’t know much about it at all. I then set off on a magical journey to find the transcription method that worked for me. I have detailed it here for anyone looking to read about alternative methods of transcription.

Attempt 1: Using voice-to-text, speaking the interview out loud

I had read online that voice-to-text tools are the way to go for transcribing. A quick Google search led me to Otter.ai, a voice-to-text transcriber. Otter.ai is good if you want to record a conversation and have it transcribed for you. Sounds almost too good to be true, right?

Right. Unfortunately, I had also read that voice-to-text tools don’t handle accents that aren’t American very well. I’m Australian and speak extraordinarily fast. My interview participants speak English as a second language (or third, or fourth), so they also have accents that aren’t American. I knew this option probably wouldn’t work for me, but I had a way around it. I had read that the simplest workaround for the accent problem is to put headphones in, listen to the recording on your phone, and repeat it out loud to your computer. Quickly edit the mistakes and, boom, you are done! The site I was reading suggested this would take only around 2 hours for a 1-hour interview.

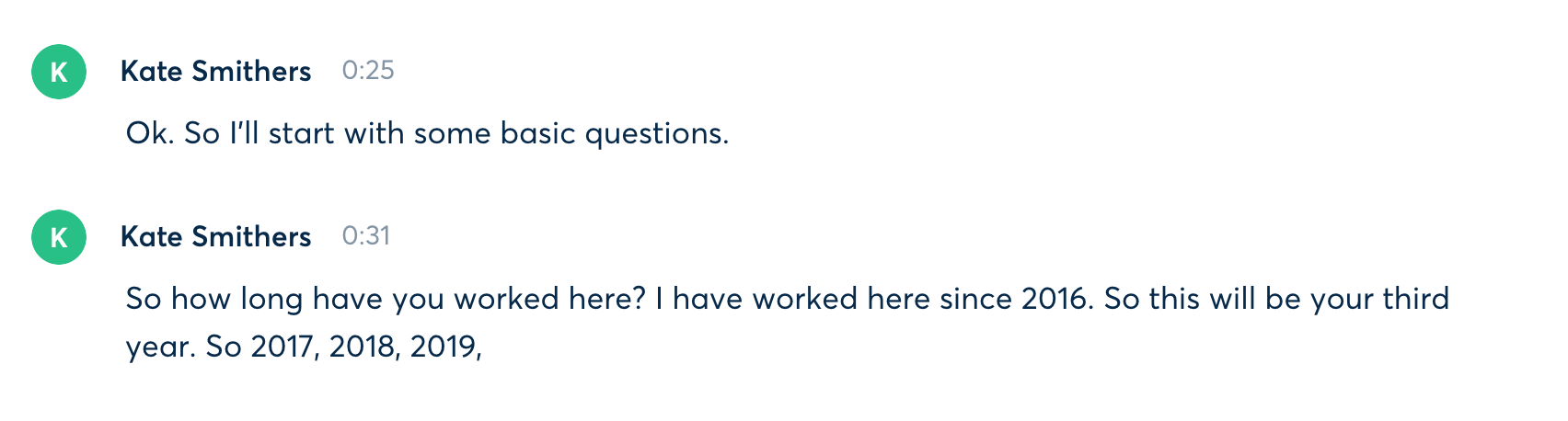

The only problem is that Otter.ai relies on distinguishing between different voices to build the transcript. Because I was speaking both sides of the interview myself, I ended up with a jumble of text that mixed my words with the interviewee’s. Here is an example with two speakers whose text has been jumbled together (I ask a question, the participant responds, and I ask a follow-up question):

Whilst it cut down on typing, I had a huge amount of editing to do, AND the timestamps didn’t align with the interview audio, because when I was speaking out loud I was a lot quicker than the interview had been. I think the first 1-hour interview took around 10-12 hours in total, because I had to listen, re-listen, and correct the words Otter had misheard (quite a lot of words, actually: it turns out it really doesn’t like my voice, or African place names). Not to mention that questions didn’t have question marks, some sentences were cut off, and so on.

Result: less typing, but 10-12 hours of work (1 hour speaking the interview out loud, roughly 10 hours editing).

Attempt 2: Letting Otter.ai have free rein of the recording

After my first attempt, I decided to let Otter.ai take complete control of the transcription. The second interview I wanted to transcribe was with a participant who had a much more British accent, and I was hoping Otter would be able to pick it up. It turns out Otter liked that participant’s accent, but it still didn’t like mine at all. The editing phase was quicker, as Otter could recognise that we were two different speakers. The whole process was also quicker because I didn’t need to speak the interview out loud, which cut out a full hour straight away. However, Otter still had problems whenever my participant spoke too quickly, or whenever I spoke. To give you an example:

Here I have put white boxes over identifying details (names of people and companies) and clear boxes around mistakes that would need editing. I have also added two red annotations showing where Otter didn’t keep text together (a small but time-consuming issue to fix).

One frustrating thing about Otter is that when you click on a word to edit it, the recording automatically starts playing from that word. I hated this: sometimes I wanted to edit a word without having to fumble around to hit pause.

Result: no typing beyond editing, but 7-8 hours of work.

Attempt 3: Heading back to NVivo

By now I had also realised a fundamental flaw in Otter.ai: it requires an internet connection. This is fine if you live in a country with stable internet and electricity, but we have had two scheduled all-day power outages in the last month, plus random ones in between lasting anywhere from 1 to 8 hours. During one of these outages I realised that I was importing my interviews into NVivo anyway, so why not try transcribing in it?

I began transcribing in NVivo and it was honestly a breeze. I can choose the playback speed of the interview (Otter.ai can do this too), and the interface is much simpler. I can control when the audio plays and use my keyboard to skip back and forth (using my Mac’s shortcuts). I can also use the keyboard to create new transcript lines, which means I don’t need to pause the audio if I can type quickly enough to keep up.

I typed the whole interview in 5 hours. This includes time spent wandering around the house, staring aimlessly into the fridge, and checking social media. Considering that professional transcription takes roughly 4 hours per hour of audio, I’m pretty happy with NVivo. I type quickly and used keyboard shortcuts, though, so if you are a slower typist this might not be for you.

Result: 5 hours transcription time, no internet required, but lots of typing.

My transcription tips

- Try many methods. Don’t just settle for the first one you try, or the one recommended to you.

- Learn the keyboard shortcuts for your program.

- Put your phone in another room so you aren’t tempted to pick it up and get distracted.

- Just do it! It is a pain and can be quite boring, but it won’t happen if you don’t do it!

- Enjoy the time of listening to your interview again.

- Buy a transcription foot pedal (something I haven’t done, but everyone recommends them).

- Don’t be sad if you don’t have funding for transcription. Being sad won’t make your transcription magically happen (I say this because I did spend a few days wishing the transcription fairy would visit).

I hope this has helped, even if only by giving you some ideas for your own transcription. Comment below if you have questions, or you can always connect with me on Twitter.